I really do enjoy a good design challenge, regardless of medium. But there are challenges, and then there's the vergence-accommodation conflict (VAC), which is the single most shitty, unavoidable side effect of how VR headsets are designed.

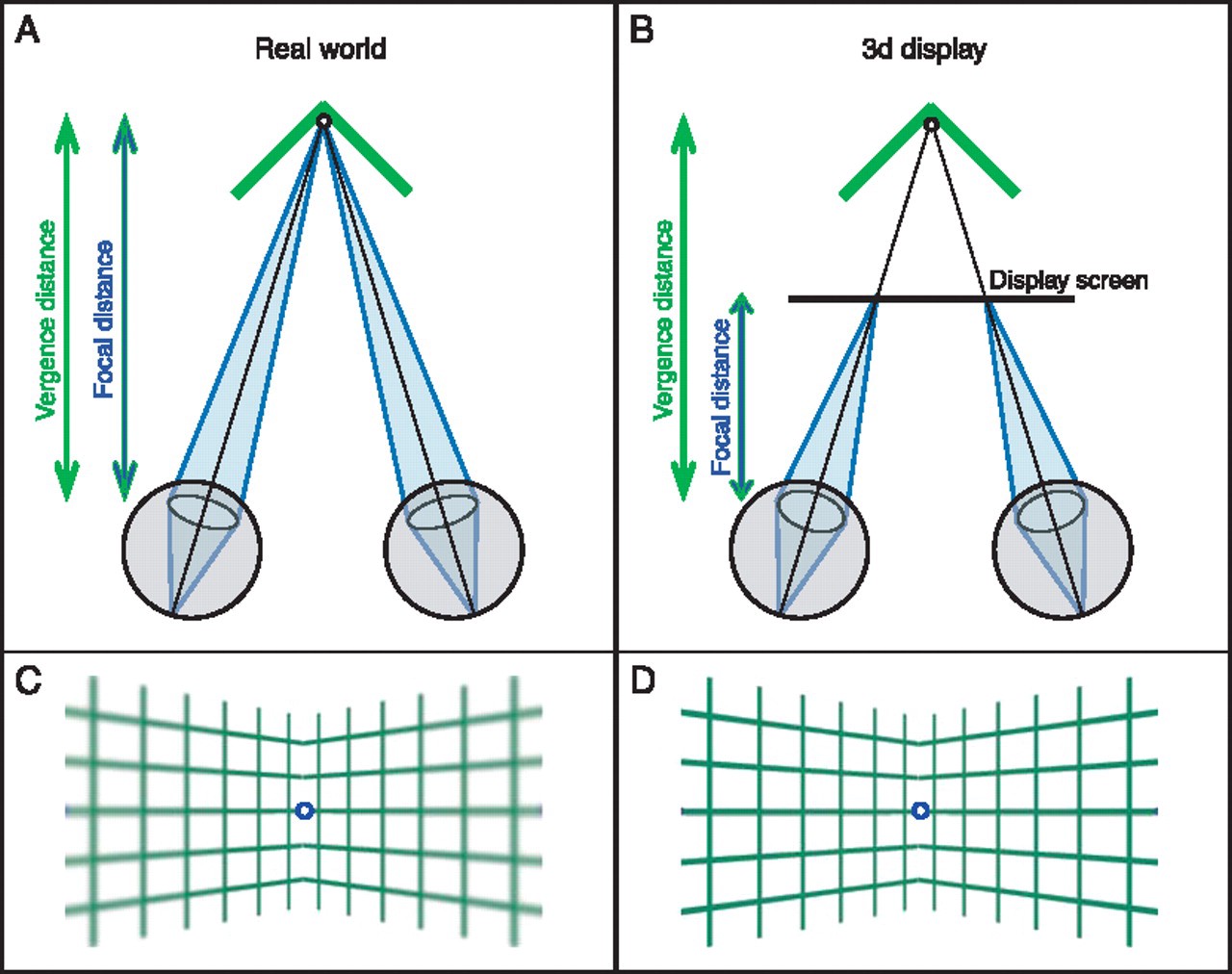

In a nutshell: the way the lenses of your eyes focus on an object (accommodation) is a separate mechanism from the way your eyes physically aim themselves at the object you're trying to focus on (vergence). In the image below, A & B depict what our weird human eyeballs are doing when we're in VR, and C & D simulate how we experience depth in the real world vs. in a VR HMD:

Above image as found in "Vergence-accommodation conflicts hinder visual performance and cause visual fatigue."

What complicates things further is how long it takes your eyes to adjust their vergence when looking from near-field to far-field. You can actually observe how slowly vergence and accommodation work together. Try it for yourself and see how long your eyes take to adjust:

- Go outside or sit in front of a window, anywhere you’ll be able to look out to the horizon (infinity).

- Hold your index finger up 3–4 inches (about 8–10 cm) from your eyes and focus on it. This should cross your eyes considerably.

- Quickly shift your gaze from your finger and look out far away into the distance.

Your eyes should have taken a noticeably long time to adjust to the new focal point: large vergence movements take the eyes a second or more, much longer than the fraction of a second it takes them to make saccades (the quick jumps your eyes make when looking back and forth and scanning the environment).
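To put rough numbers on the finger exercise, here is a small sketch that computes the vergence angle and the focusing (accommodation) demand for a finger about 3.5 inches away versus the horizon. Accommodation demand is measured in diopters, the reciprocal of distance in meters, so optical infinity is 0 D. The 63 mm interpupillary distance is an assumed typical adult value, not a figure from this article.

```python
import math

INCH_TO_M = 0.0254
ASSUMED_IPD_M = 0.063  # assumed interpupillary distance; varies from person to person

def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    if math.isinf(distance_m):
        return 0.0  # lines of sight are parallel at optical infinity
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def accommodation_demand_d(distance_m: float) -> float:
    """Focusing demand in diopters (1 / meters); 0 D at optical infinity."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m

finger_m = 3.5 * INCH_TO_M  # roughly 0.09 m
for label, d in [("finger", finger_m), ("horizon", math.inf)]:
    print(f"{label:8s} vergence={vergence_angle_deg(d):5.1f} deg  "
          f"accommodation={accommodation_demand_d(d):5.1f} D")
```

With these assumptions the finger demands roughly 39 degrees of convergence and about 11 D of focus, versus zero for both at the horizon, so both systems have a long way to travel when you switch targets.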

So what's the VR problem? Current-gen HMDs for virtual reality are essentially flat display panels inside a pair of goggles. Lenses place the image at a single fixed focal distance, and the headset simulates depth by showing each eye a slightly different image. Your eyes have to focus (accommodation) on that fixed focal plane while converging (vergence) on whatever distance the simulated object appears to be at. That disparity is the conflict.

Virtual reality headsets like the Rift or the Vive put a screen literally inches from your eyes and ask you to keep focusing at that one fixed distance, while converging on points in the simulated world that may be much closer or much farther away. In the real world, human eyes converge and focus on one single point. In fact, your brain is so used to performing both of these physiological mechanisms at the same time, toward the same point, that activating one of them instinctively triggers the other.
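The conflict can be expressed in diopters: the gap between the focusing demand of the virtual object (where the eyes converge) and that of the headset's focal plane (where they must focus). This sketch assumes a hypothetical fixed focal distance of 1.5 m; real headsets differ, and this article does not give their values.

```python
HYPOTHETICAL_FOCAL_DISTANCE_M = 1.5  # assumed focal distance; not a spec for any real headset

def diopters(distance_m: float) -> float:
    """Focusing demand for a given viewing distance (1 / meters)."""
    return 1.0 / distance_m

def vac_diopters(virtual_distance_m: float,
                 focal_distance_m: float = HYPOTHETICAL_FOCAL_DISTANCE_M) -> float:
    """Vergence-accommodation conflict: gap between where the eyes converge
    (the virtual object) and where they must focus (the display's focal plane)."""
    return abs(diopters(virtual_distance_m) - diopters(focal_distance_m))

for d in (0.3, 0.5, 1.0, 1.5, 3.0, 10.0):
    print(f"object at {d:4.1f} m -> conflict {vac_diopters(d):.2f} D")
```

Under this assumption the conflict climbs steeply for objects closer than the focal plane, while anything beyond it can never exceed 1 / focal distance (about 0.67 D at 1.5 m). That asymmetry is one way to read the guidelines below.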

All kinds of bad, nasty things happen when people are asked to separate the two: discomfort and fatigue that can cause users to end their sessions early, headaches that persist for hours afterward, even nausea in some people (though it is often hard to isolate VAC from all of the other things that make people sick).

So it's in everyone's best interest to solve this problem, whether through hardware changes, light-field displays, or code that accounts for these kinds of effects. I'm not qualified to go into much detail on the programming side of things, but some pretty clever developers & academics are actively working on software solutions.

Since we're stuck with the hardware we've got for now, and software approaches are still TBD, design gives us a band-aid solution. Fortunately, there are some good best practices in virtual reality UX design that we can use to reduce or avoid VAC-induced discomfort. The following are solutions presented by Hoffman et al. in "Vergence-accommodation conflicts hinder visual performance and cause visual fatigue," combined with my own experiences:

Use long viewing distances when possible. Focus cues have less influence as the distance to the display increases; placing content beyond 1 meter should suffice.
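The reason distance helps comes down to the diopter scale: focusing demand goes as 1 / distance, so the same physical depth step costs far less focusing change when it happens farther away. This sketch only does that arithmetic; the 0.5 m step is an arbitrary example value.

```python
def dioptric_change(near_m: float, far_m: float) -> float:
    """Change in focusing demand (diopters) between two viewing distances."""
    return abs(1.0 / near_m - 1.0 / far_m)

STEP_M = 0.5  # arbitrary example depth step
for start in (0.5, 1.0, 2.0, 4.0):
    print(f"{start:.1f} m -> {start + STEP_M:.1f} m: "
          f"{dioptric_change(start, start + STEP_M):.2f} D")
```

The same half-meter step is a full diopter between 0.5 m and 1 m, but only a tenth of a diopter between 2 m and 2.5 m.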

Match the simulated distance of content to the display's focal distance as closely as possible. That means placing in-game objects that are being examined or interacted with at roughly the same distance as the headset's fixed focal point.
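A minimal sketch of this guideline, assuming you know (or have estimated) your headset's focal distance: clamp the placement distance of an interactive object so its focusing demand stays within a tolerance of the focal plane's. `HYPOTHETICAL_FOCAL_DISTANCE_M` and `HYPOTHETICAL_TOLERANCE_D` are made-up placeholders, not published values for any headset.

```python
HYPOTHETICAL_FOCAL_DISTANCE_M = 1.5  # assumed headset focal distance
HYPOTHETICAL_TOLERANCE_D = 0.5       # assumed acceptable conflict, in diopters

def clamp_to_comfort(desired_m: float,
                     focal_m: float = HYPOTHETICAL_FOCAL_DISTANCE_M,
                     tolerance_d: float = HYPOTHETICAL_TOLERANCE_D) -> float:
    """Return the distance (m) nearest to desired_m whose focusing demand lies
    within tolerance_d diopters of the focal plane's demand. desired_m must be > 0."""
    focal_d = 1.0 / focal_m
    desired_d = 1.0 / desired_m                     # 0.0 if desired_m is infinite
    near_limit_d = focal_d + tolerance_d            # closest allowed (more diopters)
    far_limit_d = max(focal_d - tolerance_d, 0.0)   # farthest allowed; 0 D = infinity
    clamped_d = min(max(desired_d, far_limit_d), near_limit_d)
    return float("inf") if clamped_d == 0.0 else 1.0 / clamped_d

for d in (0.3, 2.0, 20.0):
    print(f"desired {d:4.1f} m -> placed at {clamp_to_comfort(d):.2f} m")
```

Working in diopters is deliberate: with the assumed values, a 0.5 D tolerance allows roughly 0.86 m to 6 m, a narrow band in front of the focal plane and a wide one behind it.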

Move objects in and out of depth at a pace that gives the user’s eyes more time to adjust. Quickly moving objects closer to or further away from the user can fatigue eyes faster.
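One way to enforce a gentler pace is to rate-limit how fast an object's focusing demand changes, in diopters per second rather than meters per second, so that motion near the user is slowed more than motion far away. `HYPOTHETICAL_MAX_RATE_D_PER_S` and the 90 fps frame rate are assumed values for illustration, not published thresholds.

```python
HYPOTHETICAL_MAX_RATE_D_PER_S = 1.0  # assumed limit; tune through user testing

def step_toward_depth(current_m: float, target_m: float, dt_s: float,
                      max_rate_d_per_s: float = HYPOTHETICAL_MAX_RATE_D_PER_S) -> float:
    """Advance an object's distance toward target_m for one frame, limiting the
    change in focusing demand (1 / distance) to max_rate_d_per_s * dt_s."""
    current_d = 1.0 / current_m
    target_d = 1.0 / target_m
    max_step_d = max_rate_d_per_s * dt_s
    delta_d = target_d - current_d
    if abs(delta_d) <= max_step_d:
        return target_m  # close enough to arrive this frame
    next_d = current_d + max_step_d if delta_d > 0 else current_d - max_step_d
    return 1.0 / next_d

# Bring an object from 3 m to 0.5 m at an assumed 90 frames per second.
distance_m, frames = 3.0, 0
while distance_m != 0.5:
    distance_m = step_toward_depth(distance_m, 0.5, dt_s=1 / 90)
    frames += 1
print(f"reached 0.5 m after {frames} frames (~{frames / 90:.2f} s)")
```

Because the limit is expressed in diopters, the object covers the far part of the trip quickly and slows down as it approaches the user, where each centimeter costs more focusing effort.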

Maximize the reliability of other depth cues. Accurate perspective, realistic shading, and other visual cues that convey realistic depth help lighten the cognitive load on brains already coping with VAC. Note: simulated blur falls into this category, but current approaches to blur effects tend to make the negative impacts of VAC worse, so your mileage may vary.

Minimize the consequences of VAC by making existing conflicts less obvious. Avoid stacking multiple small objects at widely varying depths so that they overlap each other, especially when your users will be viewing them head-on for an extended period of time. Also try to increase the distance to the virtual scene whenever possible.
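A rough design-time check for this guideline: given UI elements that overlap on screen, measure how far apart they are in focusing terms and flag stacks whose spread exceeds a limit. The `Element` type and `HYPOTHETICAL_MAX_SPREAD_D` are illustrative assumptions, not part of any engine's API.

```python
from dataclasses import dataclass
from typing import Sequence

HYPOTHETICAL_MAX_SPREAD_D = 0.5  # assumed limit on depth spread within one overlapping stack

@dataclass
class Element:
    name: str
    distance_m: float  # distance from the viewer, in meters

def depth_spread_d(stack: Sequence[Element]) -> float:
    """Range of focusing demand (diopters) across a non-empty stack of overlapping elements."""
    demands = [1.0 / e.distance_m for e in stack]
    return max(demands) - min(demands)

def flag_if_too_spread(stack: Sequence[Element],
                       max_spread_d: float = HYPOTHETICAL_MAX_SPREAD_D) -> bool:
    """True when two or more overlapping elements span more than max_spread_d diopters."""
    return len(stack) > 1 and depth_spread_d(stack) > max_spread_d

hud = [Element("tooltip", 0.4), Element("panel", 1.2), Element("backdrop", 5.0)]
print(f"spread {depth_spread_d(hud):.2f} D, flagged: {flag_if_too_spread(hud)}")
```

Measuring the spread in diopters rather than meters means a stack that sits far away can span a lot of physical depth before it gets flagged, which matches the advice to push the scene farther out.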

These best practices won’t solve the problem entirely, but they’ll definitely make a difference in the comfort and stamina of your users.

Further reading:

Resolving the Vergence-Accommodation Conflict in Head Mounted Displays by Gregory Kramida and Amitabh Varshney
